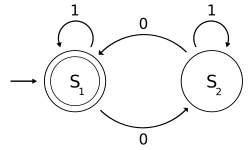

The first, and simplest, model of a computer is a state machine. A state machine has a finite set of states and a finite set of input symbols it can receive. It has a start state and one or more final states. Its next state depends only on its current state and the input symbol it receives. The language it accepts is the set of input strings that lead to a final state. For example, the machine to the right accepts the inputs ε (the empty string), 1, 00, 0101, and an infinite number of other inputs. All these inputs together define its language.

All state machine languages have a similar form. They're called regular languages, and they are defined by regular expressions, which are often used to pattern match a set of inputs. For example, the regular expression (0 + 1(01*0)*1)* defines the language of all binary numbers divisible by three. Regular expressions are useful for find-and-replace-like operations, or for tokenizing text. Regular languages are the simplest class of language in this hierarchy, since they are built from nothing more than concatenation, choice, and repetition of inputs.
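To make the divisible-by-three example concrete, here is a minimal sketch of a three-state machine for that language, next to the same language written for Python's `re` module (which spells the `+` of choice as `|`). The names `TRANSITIONS`, `accepts`, and `regex_accepts` are mine, chosen for illustration. Each state is the remainder, modulo 3, of the number read so far.

```python
import re

# State = value of the bits read so far, modulo 3.
# Reading bit b takes remainder r to (2 * r + b) % 3.
TRANSITIONS = {
    (0, "0"): 0, (0, "1"): 1,
    (1, "0"): 2, (1, "1"): 0,
    (2, "0"): 1, (2, "1"): 2,
}
START = 0
FINAL = {0}  # remainder 0 means divisible by three


def accepts(bits: str) -> bool:
    """Run the state machine over a string of '0' and '1' characters."""
    state = START
    for symbol in bits:
        state = TRANSITIONS[(state, symbol)]
    return state in FINAL


# The same language as a regular expression.
DIVISIBLE_BY_THREE = re.compile(r"(0|1(01*0)*1)*")


def regex_accepts(bits: str) -> bool:
    """Check the whole string against the regular expression."""
    return DIVISIBLE_BY_THREE.fullmatch(bits) is not None
```

For instance, `accepts("110")` is true (110 is six) and `accepts("111")` is false (111 is seven), and `regex_accepts` agrees on both. Like the regular expression, the machine also accepts the empty string.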

The second class of machine is the stack machine. A stack machine doesn't just store the current state; it also has a stack of symbols that, along with the current state and the input provided, determines the next state. Otherwise, it's pretty similar to a state machine. This extra bit of space, though, lets stack machines recognize languages that state machines can't. For example, while state machines can handle L(a*b*), the set of strings having any number of a's followed by any number of b's, they can't handle {aⁿbⁿ}, the set of strings in which n a's are followed by exactly n b's, because that requires counting without bound.
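Here is a rough sketch of how a stack lets a machine match a's against b's. It is a simplified illustration rather than a formal pushdown automaton definition, and the function name `accepts_anbn` and the state labels are my own.

```python
def accepts_anbn(text: str) -> bool:
    """Accept strings made of n a's followed by exactly n b's (n >= 0)."""
    stack = []
    state = "reading_a"
    for symbol in text:
        if state == "reading_a" and symbol == "a":
            stack.append("A")      # remember one 'a' on the stack
        elif symbol == "b" and stack:
            state = "reading_b"    # from now on only b's are allowed
            stack.pop()            # cancel one remembered 'a'
        else:
            return False           # out-of-order symbol or too many b's
    return not stack               # every 'a' must have been cancelled
```

The finite part of the machine only knows whether it is still reading a's or has moved on to b's. The count itself lives entirely on the stack, which is exactly what a plain state machine lacks.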

Stack machines recognize context-free languages, which are more expressive than regular languages. A context-free grammar for the language {aⁿbⁿ} is S -> aSb | e, where e is the empty string. Each S can be replaced with either aSb or the empty string. It is 'context free' because an S can be replaced no matter what symbols surround it; the rule never depends on the context in which S appears. Context-free languages are surprisingly powerful. Much of the sentence structure of human languages can be expressed with a context-free grammar. A sentence S can be replaced with a noun phrase (NP) and a verb phrase (VP), which can in turn be replaced with other parts of speech.
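The grammar S -> aSb | e can be watched at work by applying its rules one step at a time. This is only a small sketch, and `derive` is a name I made up for it: it applies S -> aSb a chosen number of times and then finishes with S -> e.

```python
def derive(n: int) -> list[str]:
    """Return each step of deriving n a's followed by n b's from S."""
    current = "S"
    steps = [current]
    for _ in range(n):
        current = current.replace("S", "aSb")   # rule S -> aSb
        steps.append(current)
    steps.append(current.replace("S", ""))      # rule S -> e (empty string)
    return steps
```

Calling `derive(2)` gives `["S", "aSb", "aaSbb", "aabb"]`. Each replacement looks only at the S itself and never at the a's and b's around it.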

Finally, the third class of machines is the Turing machine. Instead of a stack, a Turing machine has a head that can read and write symbols on an unbounded tape and can move in either direction. With this simple change, we have the most powerful model of computation known. No more powerful model has been found. Turing machines can execute any algorithm. They can recognize {aⁿbⁿcⁿ}, which stack machines cannot, as well as L(a*b*) and {aⁿbⁿ}.
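As an illustration of the read, write, and move-either-way head, here is one possible Turing machine for {aⁿbⁿcⁿ}, simulated in Python. The state names `q0` to `q4`, the marker symbols `X`, `Y`, `Z`, and the function name are all my own choices; other rule tables would work just as well. The machine repeatedly marks one a, one b, and one c, then walks back left to start the next round.

```python
BLANK = "_"

# (state, symbol read) -> (symbol to write, head movement, next state)
RULES = {
    ("q0", "a"): ("X", +1, "q1"),        # mark an 'a', go look for a 'b'
    ("q0", "Y"): ("Y", +1, "q4"),        # no a's left: verify the rest
    ("q0", BLANK): (BLANK, +1, "accept"),  # empty input is in the language
    ("q1", "a"): ("a", +1, "q1"),
    ("q1", "Y"): ("Y", +1, "q1"),
    ("q1", "b"): ("Y", +1, "q2"),        # mark a 'b', go look for a 'c'
    ("q2", "b"): ("b", +1, "q2"),
    ("q2", "Z"): ("Z", +1, "q2"),
    ("q2", "c"): ("Z", -1, "q3"),        # mark a 'c', head back left
    ("q3", "a"): ("a", -1, "q3"),
    ("q3", "b"): ("b", -1, "q3"),
    ("q3", "Y"): ("Y", -1, "q3"),
    ("q3", "Z"): ("Z", -1, "q3"),
    ("q3", "X"): ("X", +1, "q0"),        # reached the last marked 'a'
    ("q4", "Y"): ("Y", +1, "q4"),
    ("q4", "Z"): ("Z", +1, "q4"),
    ("q4", BLANK): (BLANK, +1, "accept"),  # only markers remained
}


def accepts_anbncn(text: str) -> bool:
    """Simulate the machine; a missing rule means the input is rejected."""
    if set(text) - {"a", "b", "c"}:
        return False                     # input alphabet is {a, b, c}
    tape = dict(enumerate(text))         # cells not stored are blank
    head, state = 0, "q0"
    while state != "accept":
        symbol = tape.get(head, BLANK)
        if (state, symbol) not in RULES:
            return False
        write, move, state = RULES[(state, symbol)]
        tape[head] = write
        head += move
    return True
```

The walk back to the left in `q3` is the part a stack machine cannot imitate: once it pops a symbol, that symbol is gone, whereas the tape keeps everything available for another pass.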

In fact, Turing machines are so powerful that they can run programs that never finish, and we can ask questions about them that lead to contradictions. Since Turing machines can understand complex languages, and since any language of sufficient complexity can have contradictory statements (such as 'This statement is false'), it's quite possible to feed computers inputs that make no sense. The classic demonstration of this is the Halting Problem. Suppose it were possible to write a program that could tell you whether any other program eventually completes or gets stuck in an infinite loop. Then you could write an inverse of that program that goes into an infinite loop if the program fed to it completes, and completes if the program fed to it never stops. Feed this program to itself and you have a program that stops when it doesn't and doesn't stop when it does. Contradiction! So no such program can exist.
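The shape of that argument can be sketched in code. No real `halts` function exists, so the one below is a hypothetical stand-in that the caller supplies, and `make_contrarian` is a name I invented. I've also simplified the 'feed it to itself' step: the inverse program takes no input and simply asks the supposed decider about itself.

```python
def make_contrarian(halts):
    """Build the 'inverse' program from a claimed halting decider.

    `halts` is hypothetical: any function that takes a program and
    returns True if it claims the program finishes, False otherwise.
    """
    def contrarian():
        if halts(contrarian):
            while True:        # decider said "finishes", so loop forever
                pass
        return "done"          # decider said "loops", so finish at once
    return contrarian


# A candidate decider that always answers "it loops forever".
def pessimist(program):
    return False


contrarian = make_contrarian(pessimist)
result = contrarian()          # finishes immediately, so pessimist was wrong
```

Here `pessimist` claims the program never stops, and the program promptly stops. A decider that answered True instead would send `contrarian` into its infinite loop (so don't run that one), which makes it wrong in the other direction. Whatever the decider says, the program does the opposite.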

I've always found this argument a little sterile, but I think the important thing is that some problems are uncomputable even though we can state them precisely. The halting problem is 'recursively enumerable' but doesn't qualify as a recursive language, one for which some Turing machine halts on every input with a yes or no answer. Recursively enumerable languages are only semi-decidable: a Turing machine will halt and accept every input that belongs to the language, but on inputs that don't belong it may run forever.

Interestingly, while no more powerful computer than the Turing machine has been discovered, there is a fifth and final class of languages that lies outside the other four classes (regular, context-free, recursive, and recursively enumerable) and cannot be recognized by any Turing machine at all. These are a little hard to grasp, but the complement of the halting problem provides an example: the set of programs that never halt. To accept a member of this language, a computer would have to run forever and then accept, which is obviously impossible.

Theoretical computer scientists like automata and formal languages because they can be studied with mathematical rigor. I'm not so interested in this. Instead, the connection between languages and machines makes me wonder about the kinds of languages that humans can understand. What kind of machines are we? Are there other languages out there we can't understand? What makes them languages? Are there computers that can understand languages that we can't? Are there more powerful machines than Turing Machines? Do we have the ability to invent them?
