[Figure: Lightning fast!]

AltaVista was better than the alternatives for a few years, but it had trouble determining relevance. If you're searching for a recipe with certain ingredients, you don't want just any recipe; you want the best recipe. If you want to read about last night's game, you don't want just anyone's commentary; you want the best commentary.

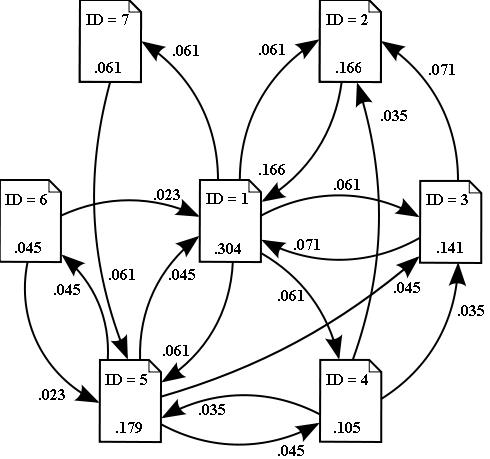

Enter Google's PageRank algorithm. Like Yahoo!, PageRank takes authority into account, but like AltaVista, it doesn't require a single source of information. Pages with more links pointing to them rank higher than pages with fewer, and a link counts for more when the page it comes from is itself heavily linked. PageRank lets you find what the most important people are talking about.

[Figure: 0.304 (at the center) is the highest PageRank in this contrived example]
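To make the ranking idea concrete, here is a minimal sketch of a PageRank-style calculation by repeated redistribution of scores. It is not Google's production algorithm. The `pagerank` function, the `toy_web` graph, the damping factor of 0.85, and the fixed iteration count are all assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank sketch.

    links maps each page name to the list of pages it links to.
    damping and iterations are illustrative assumptions.
    """
    # Collect every page that appears as a source or a target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    # Start with every page equally important.
    rank = {p: 1.0 / n for p in pages}

    for _ in range(iterations):
        # Every page gets a small baseline score.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current score among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its score evenly.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# A hypothetical five-page web: most pages link to "hub".
toy_web = {
    "hub": ["a"],
    "a": ["hub"],
    "b": ["hub"],
    "c": ["hub"],
    "d": ["hub", "a"],
}

ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph, "hub" ends up on top because the most pages link to it, and "a" comes second even though only two pages link to it, because one of those two is the highly ranked "hub".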

It's a bit more complicated than this, but what is revolutionary here (and I use the term revolutionary sparingly, unlike, ahem, Apple) is that Google's algorithm did not try to reproduce the intelligence that was already out there on the web. Instead, it tried to capitalize on the accumulated intelligence of millions of individuals who had made a simple choice: to link or not to link.

Linking is a binary action that, when accumulated into a large network of linked pages, allows for an emergent kind of intelligence. Emergence happens when a simple set of rules adds up to something greater than the sum of its parts. Ants, for example, are very stupid individually, but when they signal to each other using evolved pheromone-based rules, some very complex behavior emerges. Similarly, neurons in themselves have no intelligence. They can either fire or not. But when you have nearly a hundred billion of them, you're on your way to self-consciousness. Markets, too, display emergent phenomena when the buying and selling of millions of people shape the direction of societies.

PageRank is just one of a few algorithms that have begun to capitalize on emergence. For decades, it was assumed that language translation software would have to know the grammatical rules of both the source and destination languages in order to decode and encode meaning appropriately. That approach was a miserable failure. Modern translation algorithms succeed because they use statistics drawn from millions of existing translated documents. The algorithm knows, for example, that it has seen 'Je suis' much more frequently than 'Je es'. It doesn't need to know anything about grammatical rules.
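The 'Je suis' versus 'Je es' comparison can be sketched with nothing more than word-pair counts. This is a toy illustration rather than how any real translation system is built. The `corpus`, `bigram_counts`, and `pick_more_likely` names are hypothetical, and the four sentences stand in for the millions of documents a real system would use.

```python
from collections import Counter


def bigram_counts(sentences):
    """Count how often each pair of adjacent words appears."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        # zip pairs each word with the word that follows it.
        counts.update(zip(words, words[1:]))
    return counts


def pick_more_likely(counts, candidates):
    """Return the two-word candidate seen most often in the corpus."""
    return max(candidates, key=lambda phrase: counts[tuple(phrase.lower().split())])


# A tiny stand-in corpus (an assumption for illustration only).
corpus = [
    "je suis content",
    "je suis ici",
    "tu es ici",
    "je suis malade",
]

counts = bigram_counts(corpus)
best = pick_more_likely(counts, ["Je es", "Je suis"])
print(best)
```

Nothing in the code encodes a rule of French conjugation. The preference for 'Je suis' falls out of the counts alone.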

[Figure: An ant fugue]

While we've made some tremendous advances in machine learning by fitting algorithms to emergent systems, the future of AI can't be purely algorithmic. It can't be only a set of rules. But it might be a set of rules that, when used in aggregate, leads to an emergent, artificial intelligence. What set of rules could be this flexible? Perhaps only the binary links of neural networks.
